Optimizing compilation and execution for dynamic languages

Published by marco on

The long and very technical article Introducing the WebKit FTL JIT provides a fascinating and in-depth look at how a modern execution engine optimizes code for a highly dynamic language like JavaScript.

To make a long story short: the compiler(s) and execution engine optimize by profiling and analyzing code and lowering it to runtimes of ever-decreasing abstraction, so that it runs as the least dynamic version possible.

A brief history lesson

What does it mean to “lower” code? A programming language has a given level of abstraction and expressiveness. Generally, the more expressive it is, the more abstracted it is from code that can actually be run in hardware. A compiler transforms or translates from one language to another.

When people started programming machines, they used punch cards. Punch cards did not require any compilation because the programmer was directly speaking the language that the computer understood.

The first layer of abstraction that most of us—older programmers—encountered was assembly language, or assembler. Assembly code still has a more-or-less one-to-one correspondence between instructions and machine-language codes but there is a bit of abstraction in that there are identifiers and op-codes that are more human-readable.

Procedural languages introduced more types of statements like loops and conditions. At the same time, the syntax was abstracted further from assembler and machine code to make it easier to express more complex concepts in a more understandable manner.

At this point, the assembler (which assembled instructions into machine op-codes) became a compiler, which “compiled” a set of instructions from the more abstract language. A compiler made decisions about how to translate these concepts, and could make optimization decisions based on registers, volatility and other settings.

In time, we graduated to functional, statically typed and/or object-oriented languages, with much higher levels of abstraction and much more sophisticated compilers.

Generally, a compiler still used assembly language as an intermediate format, which some may remember from their days working with C++ or Pascal compilers and debuggers. In fact, .NET languages are also compiled to IL—the “Intermediate Language”—which corresponds to the instruction set that the .NET runtime exposes. The runtime compiles IL to the underlying machine code for its processor, usually in a process called JIT—Just-In-Time compilation. That is, in .NET, you start with C#, for example, which the compiler transforms to IL, which is, in turn, transformed to assembler and then machine code by the .NET runtime.

Static vs. Dynamic compilation

A compiler and execution engine for a statically typed language can rely on guarantees about the types of variables. The set of possible types is known in advance and types can be checked very quickly in the cases where a check is necessary at all. That is, the statically typed nature of the language allows the compiler to reason about a given program without guessing: certain features of a program can be proven to be true. A runtime for a statically typed language can often avoid type checks entirely. It benefits from a significant performance boost without sacrificing any runtime safety.

The main characteristic of a dynamic language like JavaScript is that variables do not have a fixed type. Generated code must be ready for any eventuality and must be capable of highly dynamic dispatch. The generated code is highly virtualized. Such code will execute much more slowly than a comparable statically compiled program.
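
To make the cost of "ready for any eventuality" a bit more concrete, here is a minimal sketch in Python rather than JavaScript. The names `dynamic_add` and `int_add` are made up for illustration and have nothing to do with WebKit's internals. The generic version has to inspect its operands on every call; the specialized version is only correct if something else has already established that both operands are integers.

```python
def dynamic_add(a, b):
    """Generic path: check operand types on every call, as untyped code must."""
    if isinstance(a, str) or isinstance(b, str):
        # Loosely mimics JavaScript's "+": any string operand means concatenation.
        return str(a) + str(b)
    if isinstance(a, (int, float)) and isinstance(b, (int, float)):
        return a + b
    raise TypeError(
        f"unsupported operand types: {type(a).__name__}, {type(b).__name__}"
    )


def int_add(a, b):
    """Specialized path: no checks, valid only if both operands are known ints."""
    return a + b


print(dynamic_add(1, 2))      # numeric addition
print(dynamic_add("a", 1))    # string concatenation
print(int_add(1, 2))          # same result as the generic path, without the checks
```

A statically typed compiler can emit the equivalent of `int_add` directly because the types are proven up front. A dynamic runtime starts with the equivalent of `dynamic_add` and has to earn the right to use the fast version.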

Profile-driven compilation

Enter the profile-driven compiler, introduced in WebKit. From the article,

“The only a priori assumption about web content that our engine makes is that past execution frequency of individual functions is a good predictor for those functions’ future execution frequency.”

Here a “function” corresponds to a particular overload of a set of instructions called with parameters with a specific set of types. That is, suppose a JavaScript function is declared with one parameter and is called once with a string and 100 times with an integer. WebKit considers this to be two function overloads and will (possibly) elect to optimize the second one because it is called much more frequently. The first overload will still handle all possible types, including strings. In this way, every code path remains available, but the most heavily used paths are more highly optimized.
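
The string-once, integer-100-times scenario above can be sketched as a small profiler that counts calls per type signature. This is an illustration in Python under my own assumptions, not how WebKit records profiles; `TypeProfile` and `describe` are hypothetical names.

```python
from collections import Counter


class TypeProfile:
    """Wraps a function and counts how often each argument-type signature occurs."""

    def __init__(self, func):
        self.func = func
        self.counts = Counter()

    def __call__(self, *args):
        # The "overload" is identified by the tuple of argument type names.
        signature = tuple(type(arg).__name__ for arg in args)
        self.counts[signature] += 1
        return self.func(*args)

    def hottest(self):
        """Return the (signature, call count) pair seen most often."""
        return self.counts.most_common(1)[0]


@TypeProfile
def describe(value):
    return f"value: {value}"


describe("hello")          # one call with a string
for i in range(100):       # one hundred calls with an integer
    describe(i)

print(describe.counts)     # call counts per type signature
print(describe.hottest())  # the signature worth optimizing first
```

With counts like these in hand, an engine can decide that the integer signature is the one worth specializing, while the generic version stays around for the string call.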

“All of the performance is from the DFG’s type inference and LLVM’s low-level optimizing power. […]

“Profile-driven compilation implies that we might invoke an optimizing compiler while the function is running and we may want to transfer the function’s execution into optimized code in the middle of a loop; to our knowledge the FTL is the first compiler to do on-stack-replacement for hot-loop transfer into LLVM-compiled code.”

Depending on the level of optimization, the code contains the following broad sections:

- Original: code that corresponds to instructions written by the author

- Profiling: code to analyze which types actually appear in a given code path

- Switching: code to determine when a function has been executed often enough to warrant further optimization

- Bailout: code to abandon an optimization level if any of the assumptions made at that level no longer apply
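
The four sections in the list above can be put together in a toy model. This is only a sketch under stated assumptions: a single expected argument type, a made-up call threshold of 10, and hypothetical names (`TieredFunction`, `generic_double`, `int_double`). The real engine has several tiers and far more sophisticated heuristics.

```python
class TieredFunction:
    """Toy two-tier function: generic code plus one type-specialized fast path."""

    def __init__(self, generic, specialized, expected_type, threshold=10):
        self.generic = generic              # original: handles every type
        self.specialized = specialized      # fast path: assumes expected_type
        self.expected_type = expected_type
        self.threshold = threshold          # hypothetical tier-up threshold
        self.hits = 0                       # profiled calls with the expected type
        self.optimized = False
        self.bailouts = 0

    def __call__(self, value):
        if self.optimized:
            if type(value) is self.expected_type:
                return self.specialized(value)
            # Bailout: the type assumption failed, so drop back to the generic tier.
            self.optimized = False
            self.hits = 0
            self.bailouts += 1
            return self.generic(value)
        # Profiling: record whether the expected type showed up.
        if type(value) is self.expected_type:
            self.hits += 1
            # Switching: tier up once the expected type has been seen often enough.
            if self.hits >= self.threshold:
                self.optimized = True
        return self.generic(value)


def generic_double(value):
    """Original code: works for strings and numbers alike."""
    if isinstance(value, str):
        return value + value
    return value * 2


def int_double(value):
    """Specialized code: a shift, which is only valid for ints."""
    return value << 1


double = TieredFunction(generic_double, int_double, int, threshold=10)
for i in range(10):
    double(i)              # profiled calls; the tenth one triggers the switch
print(double.optimized)    # the fast path is now active
print(double(21))          # served by int_double
print(double("ab"))        # wrong type: bails out to generic_double
print(double.optimized, double.bailouts)
```

Note that the bailout resets the hit counter, so the function has to prove itself hot again before it is re-optimized. That is a design choice of this sketch, not a claim about WebKit's policy.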

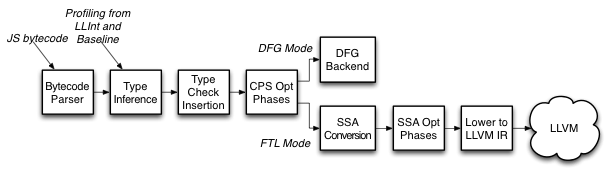

FTL Pipeline

While WebKit has included some form of profile-driven compilation for quite some time, the upcoming version is the first to carry the same optimization to LLVM-generated machine code.

I recommend reading the whole article if you’re interested in more detail, such as how they avoided LLVM compiler performance issues and how they integrated this all with the garbage collector. It’s really amazing how much of what we take for granted the WebKit JS runtime treats as “hot-swappable”. The article is quite well-written and includes diagrams of the process and underlying systems.