How important is human expertise?

Published by marco on

I have a lot of questions about the rush to replace human expertise with machine-based expertise.

The Expertise Pipeline

Do we still need expertise? If so, how do we obtain it? What do we do when we saw off the branch we’re sitting on by getting rid of the first half of the pipeline that leads to the second half containing expertise?

The pipeline looks roughly like this right now:

1. Prime the pump with self-starters/geniuses

2. Add people who learn from those pioneers/initial experts

3. Those people, in turn, become experts

4. GOTO 2

What happens when we strip out step two because it’s more cost-effective not to waste time and money training noobs? How will we create future experts? This short-term thinking might break a machine that will take a generation or two to start up again.
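To make the loop concrete, here is a deliberately crude toy model in Python. Everything in it is an assumption made for illustration: the function name `simulate_experts`, the starting count of ten pioneers, one trainee per expert, and the idea that each generation of experts retires after a single round. It is a sketch of the argument, not a forecast.

```python
from typing import List


def simulate_experts(
    generations: int,
    train_newcomers: bool,
    pioneers: int = 10,            # assumed size of the initial expert pool
    trainees_per_expert: int = 1,  # assumed: each expert trains one newcomer
) -> List[int]:
    """Return the number of active experts in each generation."""
    experts = pioneers  # step 1: prime the pump
    history = []
    for _ in range(generations):
        history.append(experts)
        # Step 2: newcomers learn from the current experts (or not).
        newcomers = experts * trainees_per_expert if train_newcomers else 0
        # Step 3: newcomers become the next experts; the old ones retire.
        experts = newcomers
    return history


with_training = simulate_experts(4, train_newcomers=True)
without_training = simulate_experts(4, train_newcomers=False)
print(with_training)
print(without_training)
```

With step two in place, the pool sustains itself. Without it, the pool is empty as soon as the first generation retires, and nothing in the loop can refill it.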

Quality and Mediocrity

What level of quality are we interested in? Which use cases do we have? Which stakeholders? Which stakeholders need which levels of quality for which use cases?

Are we accepting a quick-cash-grab mediocre quality now, promising ourselves we’ll get better quality later?

Are we allowing the quick-cash/get-out mentality to affect what we create? Are we sacrificing durability?

We’ve done it all before. A lot. We’ve sacrificed a lot on the altar of profitability.

Will we forget that we’re making mediocrity? You know, relative to what we used to strive for?

This has already happened in so many places, as the grinding gears of capitalism find the least-bad thing that people are willing to accept. When they realize it’s not good enough, it’s too late. No-one is making good things anymore. You’re stuck with mediocrity.

Does it break in one year?

Buy a new one.

What if you want one that lasts ten years?

Too bad.

What if you hate that it wastes resources and pollutes the environment?

The market doesn’t care, otherwise that product would exist.

Maintenance, Testing, Refactoring

Do we still need the things that led us to develop the techniques we used when we were still building and modifying things ourselves?

This goes to the heart of what I’ve learned over three decades in software development in all capacities (developer, architect, manager, etc.). It’s easy to think that the instincts that you’ve built up are unassailable, that they’re always going to apply. I’m talking about techniques like testing, architecture, documentation—the whole shebang. If you’re honest, though, you’ll accept that the necessity of those techniques is contingent on certain axioms, axioms that may no longer apply.

This is just a thought experiment. If you have a tool that just creates what you need from a few instructions, then the approach to maintenance might be considerably different, no? If you never need to look into the guts of what’s been built, then…then what do you need unit tests for? Why would you need an architecture? Why write developer documentation? You’re never going to touch that code again.

If you need to modify the behavior of the system, you use your original prompt plus whatever adjustments you need—and generate a whole new system from whole cloth. You just throw away the old version. You don’t need to maintain it, you don’t need to adjust it, you don’t even need to understand it.

Sounds great, right? 🙌

I have concerns. Perhaps we can allay them. Perhaps not. I think they’re valid.

Who needs testing?

In code, for example, we focus on testability so that we can refactor, and improve, and extend. What if the code doesn’t need to be improved or extended? What happens then? Do we still need tests? How do you know it does what you’ve been told it does? Are you testing everything manually? Are you testing it at all? Or are you just trusting that it works? Are you just trusting that your prompt-Svengali got it just right? Or are you also generating your tests with your LLM?

You may no longer need unit or integration tests, but you will still need acceptance tests, probably in the form of end-to-end tests. Maybe an LLM can write those for you, but probably not. You’ll probably need an expert, somewhere at the front of the process, where the LLM can no longer help you. You’re going to need somebody who knows what the f@%k they’re talking about, rather than a half-trained/StackOverflow noob with an eager but brain-dead LLM.
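As a rough sketch of what that could look like, here is a black-box acceptance check in Python. The function `generated_total_cents` is a hypothetical stand-in for generated code that nobody plans to read, and the cases in `ACCEPTANCE_CASES` are invented examples of the kind of expectations a domain expert would have to write down. The checks only look at inputs and outputs, never at the implementation.

```python
from typing import Callable, List, Tuple


# Hypothetical stand-in for generated code that we never intend to read.
def generated_total_cents(unit_price_cents: int, quantity: int) -> int:
    return unit_price_cents * quantity


# Assumed acceptance criteria, written by someone who knows the domain:
# (unit price in cents, quantity, expected total in cents)
ACCEPTANCE_CASES: List[Tuple[int, int, int]] = [
    (250, 4, 1000),
    (999, 1, 999),
    (500, 0, 0),
]


def run_acceptance(system: Callable[[int, int], int]) -> List[str]:
    """Run every case against the black box and collect failure messages."""
    failures = []
    for price, quantity, expected in ACCEPTANCE_CASES:
        actual = system(price, quantity)
        if actual != expected:
            failures.append(
                f"{quantity} x {price}: expected {expected}, got {actual}"
            )
    return failures


failures = run_acceptance(generated_total_cents)
print("accepted" if not failures else failures)
```

The code is the easy part. Deciding which cases belong in that list, and what the expected values should be, is the part that still needs somebody who understands the domain.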

Instead of adding the ½ of a noob developer to the ½ of an LLM to get 1, we should be open to the possibility that we’re actually multiplying them to get ¼. 😉

So, you’re going to need something that knows how to think about your application domain in order to decide what an acceptable solution would be, to define the boundaries of your application domain. I’m not sure anyone is proposing that the current crop of LLMs is even tending in this direction.

Human experts to the rescue. Let’s hope we still have a system that knows how to create them reliably.

Who needs requirements?

Talking about acceptance tests leads to asking “what are we building? What does it do?”

We need requirements.

Where do requirements come from in a world without human experts? The toughest job in a project is figuring out what you want—and then specifying it. You need all of that before you get an LLM involved. Otherwise, what are you building?

The LLM will help you build the thing that it thinks you want from your vague prompts. You, in turn, will be willing to round up whatever it provides you to the thing you thought you needed, just so you can be done more quickly, and increase your personal profit margin.

You’ll also have to do it because you’ll have no other choice and your boss is riding your ass for KPIs. You can only hope that quality expectations have dipped enough to meet you in the realm of mediocrity where your solution lies.

Product-development Cycle

Let’s go into a bit more detail on what the product-development cycle looks like.

If we’ve generated our software with an LLM, then we’d better hope that the LLM keeps helping us because, as noted above … if we do want to modify the solution, then how do we do that? Do we go back to the original prompts? Or do we feed it the current version and ask it to update it? Does this work? How do we formulate the request precisely enough? Especially when we no longer have people trained to think precisely?

There are a lot of things that we’ve learned to do and which we’ve added to our programming languages to allow certain features, like extensibility, etc. The SOLID principles. Do those still apply? If not, why not? Or, to be clearer, to which projects do they still apply? If it’s so easy to replace so much code with an LLM’s hallucinations, then were we over-engineering before? If no, then why would we throw away all of our techniques now?

Do we still need documentation? Manuals? Tutorials? If we don’t have people learning how to code, who will maintain the LLMs? Who will build the next generation? Will they build themselves?

Thinking about LLM output

How can I extend the product of a 🤖? Can I get at the source? How understandable is the output? How well do I know the area? Can I judge the quality? How well can I verify that the output matches my requirements?

What is the output of these LLMs? What can we do with it? What do we want to do with it? If we want humans to be able to extend it, if what LLMs produce are just building blocks, then the output has to continue to be manipulable. If not … then it can be anything, like just an EXE, right? Right now, it generates code that the “developers” who requested it don’t really understand. Why not just generate binary code?

That’s kind of how image generators are right now. They produce a JPEG, not a PSD. There is no source to speak of, no easily updated layers containing the various parts. Existing tools don’t allow you to work with that kind of output yet, except poking around the outsides of a black box.

GIGO

This is how a lot of people program now, which I think is why doing it with an LLM is so appealing. Their jobs will not change one bit. They didn’t understand how things worked before and they don’t understand now, but they’re faster at it.

Will we have to deal with the flotsam and jetsam produced by this age? How? Does it matter?

Or can we just throw it all away and start over fresh each time? Does that scale?

Seriously, I’m looking at the ERP system we have. It’s a usability and functional nightmare. I’ve never seen the like. This is what we feed the LLM.

I just had a PowerPoint open. I scrolled on it. It asked me where I wanted to save my changes.

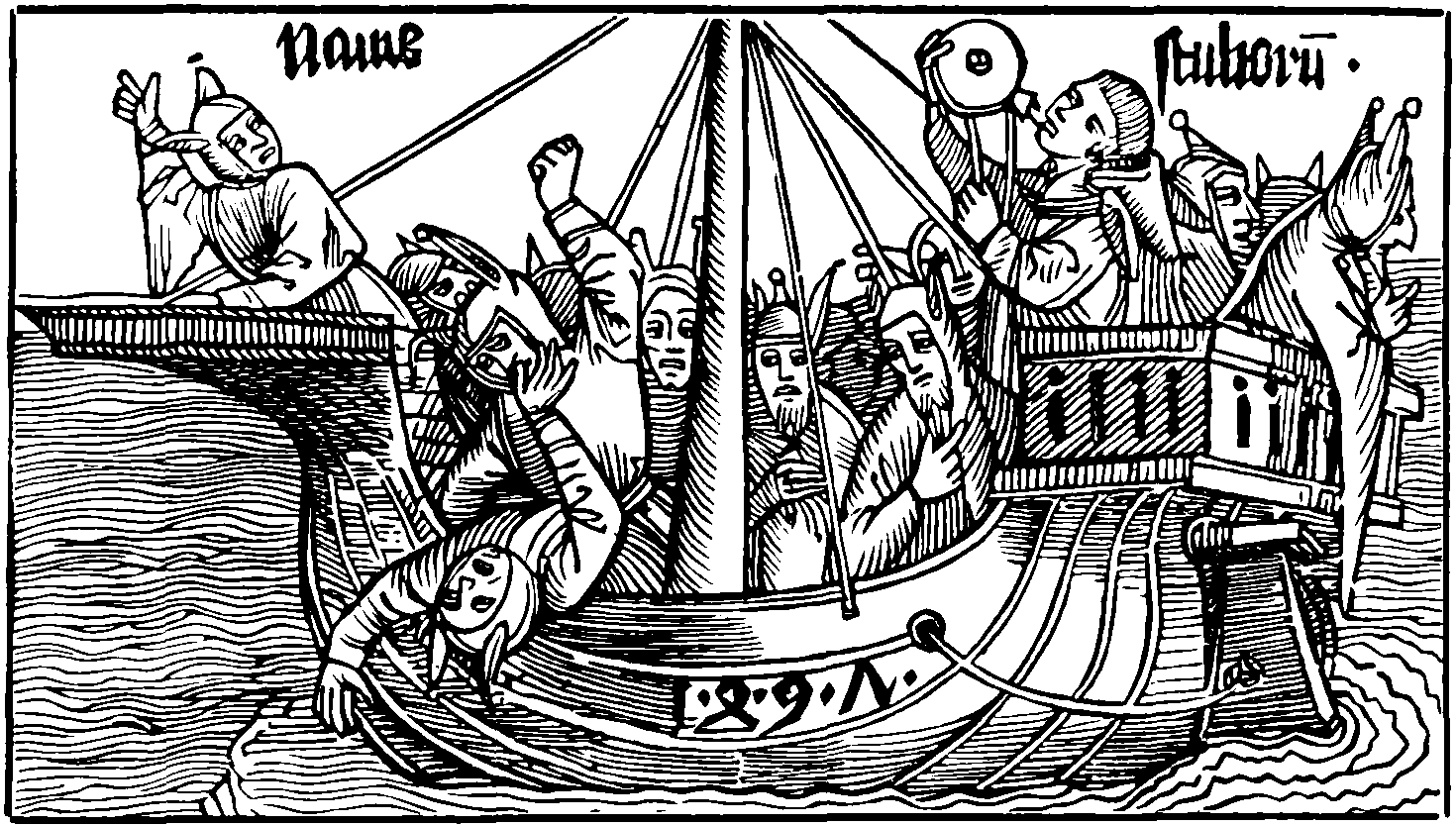

Will LLMs help us fix this? I don’t see how. All they will do is help crowd this ship of fools even more.

Ship of Fools by Sebastian Brandt

Updating outdated techniques

How do you get it to give you cutting-edge CSS solutions? Like, does it just return flexbox stuff? Or can it do grid stuff? Can it give you something responsive, you know, using the glorious elegance and flexibility that the CSS designers built? Or does it just give you the flexbox or grid equivalent of an absolutely positioned mess of floats or tables from the old days?

Have we reached the end of the line with CSS usage? If no-one learns how to use the newer, cooler, more powerful stuff, then who will write the stuff that feeds the LLM so that it can suggest it to those who don’t know it? Where does the content come from if everyone’s using an AI to generate content? A copy of a copy … well, science-fiction authors have shown us for years where that ends up.

Michael Keaton in Multiplicity − Copy of a copy of a copy

We already have a lot of bad software today, written by mediocre programmers with questionable technique and no thought of maintainability. All of that was fed into the engine that now helps worse programmers become mediocre. Where will the good code come from? Magic?

An LLM is like an e-bike. You’ll never go faster than 25kph with it. It’s like swipe-typing. It will never be as fast as typing on an actual desktop keyboard. It’s like mobile devices: you can’t program there. Not really.

How do you learn how to debug? To pay attention? How do you learn how to correct it?

Who’s priming future LLMs?

Who’s going to use amazing tools like, e.g., named gridlines in CSS?

Don’t need ’em! It can all just be generated because humans don’t need to edit it! It’ll be like assembler code!

Really, though? I don’t think the comparison is apt. They’re semantically different jobs. Who’s going to write the CSS versions that are responsive and maintainable and performant? Not many people have written those yet.

The LLM can’t extrapolate them from the help docs because there isn’t enough source material to make its way into a suggestion. The probabilities won’t get high enough to outweigh the sheer bulk of all of the mediocre shit that ends up tempting its way into the LLM’s answer.

The final brain-drain

We have spent decades building tools that help us build stuff that is more efficient and easier to understand and more powerful, all at the same time. But most people never learned how to use these tools or techniques. But some did. And they made amazing things.

Where will those people come from if we brain-drain everyone into using AI instead?

I worry so much about the tyranny of lowered expectations.

Right now, we have amazing people building our standards, building the browsers that implement those standards, etc. etc. Where do those people come from in the future if there’s no pipeline to teach them? Where do they gain experience if there’s no room for them in organizations? To learn?

Because here’s where the rubber meets the road: the problem is not that AI exists. It’s that capitalism exists, it’s that neo-liberal, late-stage capitalism[1] doesn’t have an answer for “what if we don’t need people to do the easy shit anymore?”

Celebrating obsolescence

I saw an article the other day on a socialist web site that couldn’t even celebrate when robots were poised to replace a whole slew of backbreaking jobs. This should be a great thing! But we know that the system we have will just drop those people like a hot rock. A compassionate system/society would, before making people’s livelihoods obsolete, be careful to think about what happens to those people.

In the case of programmers, we have to legitimately worry about how we produce the minds that will continue to produce the bounty of miracles that have now cut off the possibility of producing the kind of mind that created the first generation of tools.

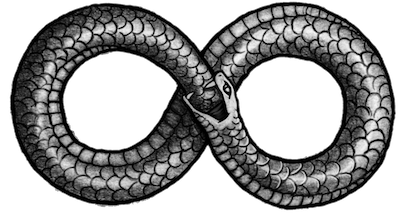

I know, it’s a mouthful. I’ve read it a few times and I’m pretty sure it says what I want it to say. Let’s put Ouroboros in here again.

Capitalism ruins everything

We’ve got “AIs” that can look at an image of a UI and build something that does what it thinks you want from it. I’m sure those aren’t cherry-picked at all. We also have “AI vision systems” that can detect faces, and whether eyebrows are arched, and so on. We have text-generators and voice simulators.

We no longer believe in vaccines, we have billions in poverty, but we have AI toys for the 1% to amuse themselves.

We need to make sure we have a plan for continued innovation and improvement. We need to be sure that we know not only what we’re gaining, but what we’re losing—and that we’re OK with that.

We need to be sure we’re not sawing off the branch we’re sitting on.

Of course, we’re not going to do any of that. Hell no.

Instead, we’ll let the greediest and most short-sighted of us decide—and then see what happens.